Are you looking for ways to ensure your business stays ahead in the competitive marketplace? One way to do this is by efficiently managing and analyzing vast datasets. If you use Amazon Redshift, a cloud-based data warehouse, and would like to enhance your analytics capabilities further, consider Elasticsearch.

Connecting Redshift to Elasticsearch allows you to leverage Elasticsearch’s real-time analytics and search features. This can help you with real-time monitoring of operations, complex analytics across diverse datasets, and improved reporting. The outcome is a dynamic analysis of your Redshift data for improved decision-making and responses to market fluctuations.

Here, you will learn two methods to load data from Amazon Redshift to Elasticsearch. But first, let’s start with a brief overview of both platforms!

Overview of Amazon Redshift

Amazon Redshift is a cloud-based petabyte-scale data warehouse service provided by AWS. It is a fully managed service that can store and query large volumes of data. Redshift uses machine learning and massively parallel processing (MPP) to query data, which it stores in columnar format, making it faster than traditional data warehouses.

Some key features of Amazon Redshift are:

- Fast Query Performance: Redshift excels at running queries faster through columnar storage, data compression, and zone mapping. The data in Redshift is also distributed across nodes based on frequently queried columns for optimal performance. Additionally, regular vacuuming and analyzing tables also increases the querying speed.

- Scalability: Redshift can handle petabyte-scale data. You can add or remove nodes in the Redshift cluster to adjust the computing power based on workload. It also supports concurrency scaling, providing thousands of concurrent users with faster query results.

- Flexible Querying: Amazon Redshift allows you to execute queries within the console or connect SQL client tools, libraries, or BI tools of your choice. The Query Editor on the Amazon Web Services console provides a powerful interface for executing SQL queries on Redshift clusters. It also lets you view the query results and execution plan for queries executed on compute nodes adjacent to your queries.

What Is Elasticsearch?

Elasticsearch is an open-source search and analytics engine. You can use Elasticsearch to store, search, and analyze large volumes of data in real time. It is based on the Apache Lucene library and developed in Java to support various applications in different languages.

Elasticsearch is based on three components: documents, index, and inverted index. Documents are basic units of information in Elasticsearch, and they can be considered as a row in a relational database. Data within the document can be updated, extracted, and transformed. The index is a collection of documents with similar characteristics; it is like a table in a relational database.

Indexing involves defining the namespace and document structure for data. This enables efficient searching in Elasticsearch. The document’s data stored in compressed form is known as an inverted index. It consists of lists of terms that are words in the form of text.

All the documents sent to Elasticsearch are stored in the Apache Lucene. The library then stores this data in an inverted index. For querying the stored data, it divides it into multiple shards, which are then distributed into multiple clusters of nodes. This enables horizontal scaling, which makes the querying faster.

Some key features of Elasticsearch include:

- Search Functionalities: Elasticsearch excels at searching large volumes of full-text-based data in structured or unstructured form. It also allows you to filter and refine searches based on specific criteria.

- Recovery Mechanism: In case of hardware failure, Elasticsearch automatically replicates data. Within an index, each document is associated with a primary shard. During a hardware or node failure, the primary shards are copied as replica shards to prevent data loss.

- Analytics Capabilities: Elasticsearch can effectively aggregate and calculate data for analytics. It also supports time series data, enabling it to store and analyze time-based data.

Easy Ways to Connect Redshift to Elasticsearch

Here are two reliable methods you can use to load data from Redshift to Elasticsearch:

- Method 1: Using Estuary Flow to achieve Redshift to Elasticsearch integration

- Method 2: Using custom scripts to load data from Redshift to Elasticsearch

Method 1: Using Estuary Flow to Achieve Redshift to Elasticsearch Integration

Estuary Flow is an efficient cloud-based data ingestion platform for real-time integrations. It helps build efficient data pipelines that can extract data from multiple sources to load into destinations.

Let’s look into the steps involved in using Estuary Flow to connect Redshift to Elasticsearch. Before you get started, make sure the following prerequisites are taken into consideration before you proceed to connect Redshift and Elasticsearch:

Step 1: Configure Redshift as the Source

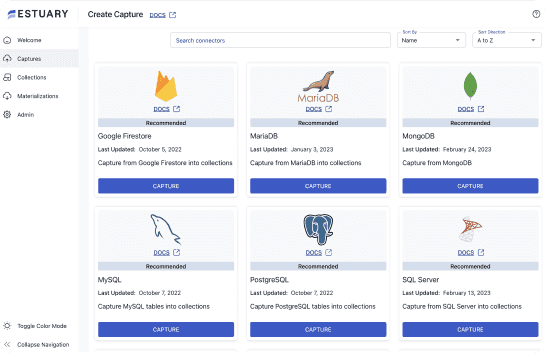

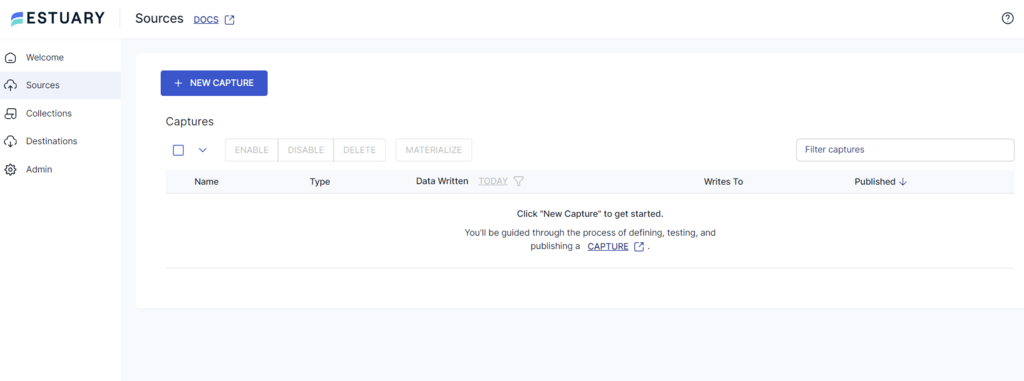

- Sign in to your Estuary account and select Sources from the main dashboard.

- On the Sources page, click on + NEW CAPTURE.

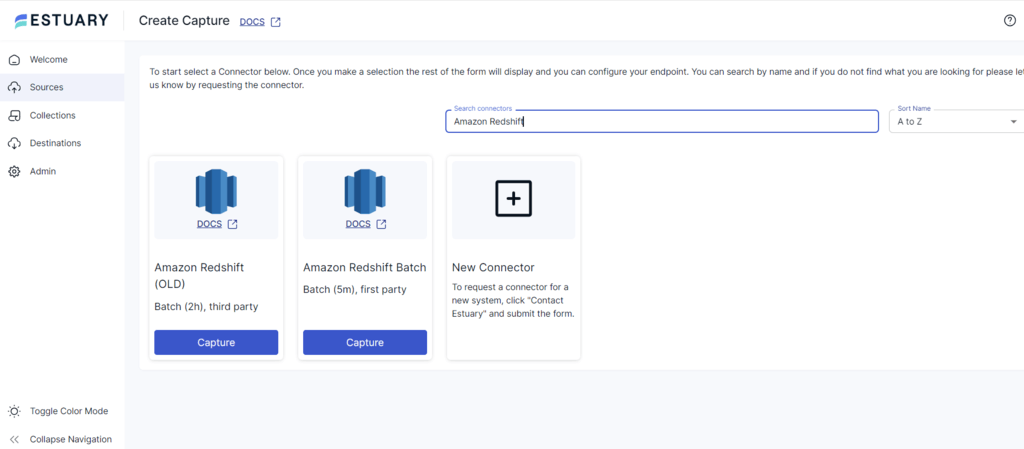

- In the Search connectors field, type Amazon Redshift. Once it appears, click on the Capture button of Amazon Redshift Batch.

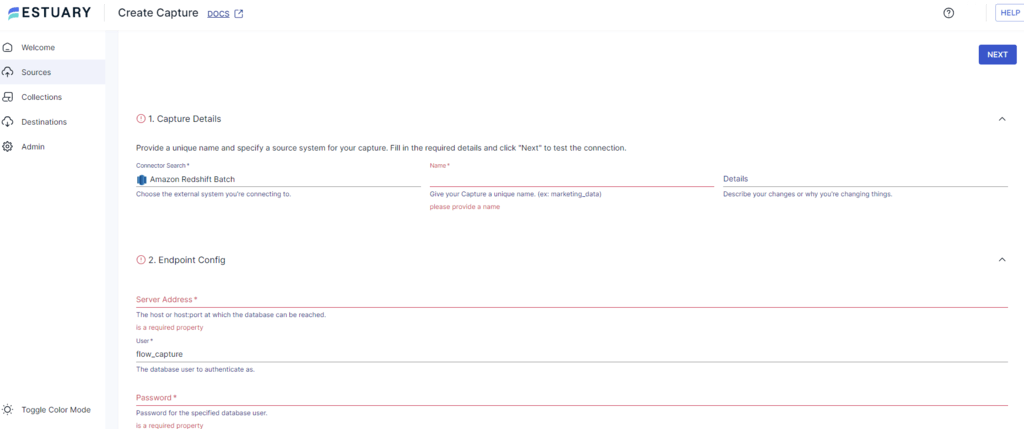

- On the Create Capture page, enter credentials such as Name, Server Address, and Password. Click NEXT > SAVE AND PUBLISH.

The Amazon Redshift connector will capture data from your Redshift cluster into Flow collections.

Step 2: Configure Elasticsearch as the Destination

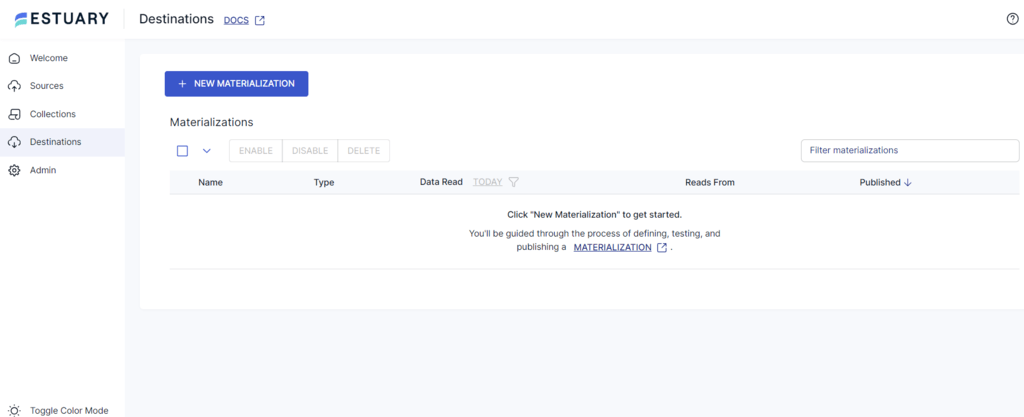

- After setting up Redshift as the source end of the ETL pipeline, you must configure Elasticsearch as the destination. To do this, go to the main dashboard and click on Destinations from the left-side panel.

- Click on + NEW MATERIALIZATION on the Destinations page.

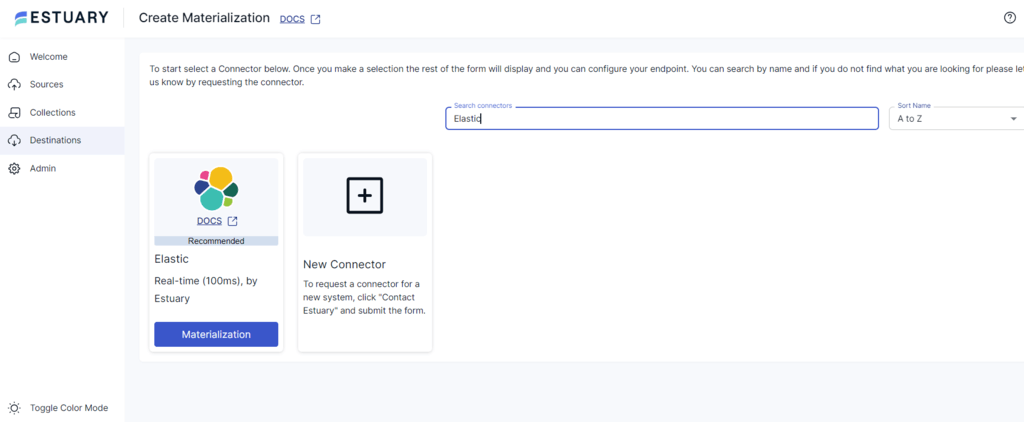

- In the Search connectors box, type Elastic. When you see the connector in the search results, click on its Materialization button.

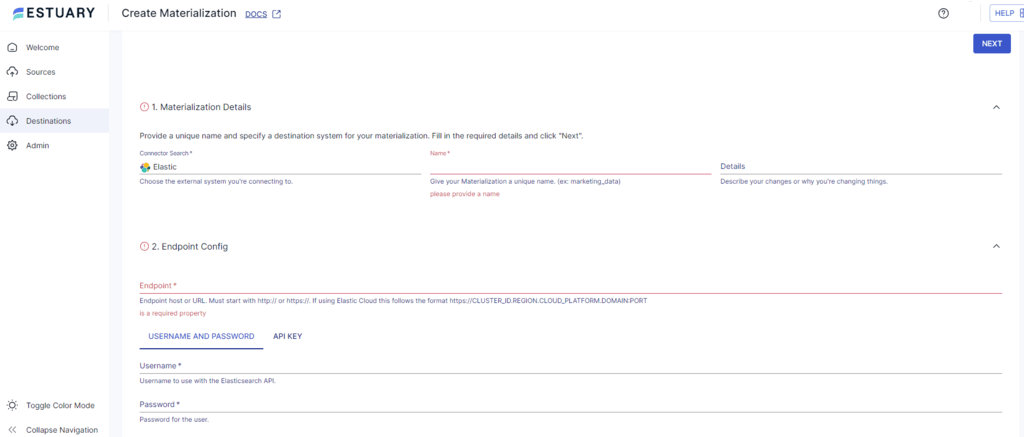

- On the Create Materialization page, enter your Name, Endpoint, Username, and Password.

- While collections added to your capture will automatically get added to your materialization, you can use the Source Collections section to link a capture to your materialization.

- Click on NEXT > SAVE AND PUBLISH.

The Elasticsearch connector will materialize Flow collections into indices in an Elasticsearch cluster.

Key Features of Estuary Flow

Here are some key features of Estuary Flow that make it a fitting choice for your data integration needs.

- Readily Available Connectors: Estuary Flow provides 300+ pre-built connectors for cloud storage platforms, data warehouses, data lakes, etc. This simplifies the data extraction and loading processes.

- Workflow Orchestration: It has the capability to orchestrate complex workflows with scheduling, error handling, and more. You can even define workflows that automate data integration from extraction to loading.

- Data Mapping Tools: Estuary Flow provides tools for data mapping and synchronization between source and destination. These include incremental data updates, change data capture, and conflict resolution features.

- Security: Encryption, access control, and auditing are some other features of Estuary Flow that ensure your data is protected during the process of data integration. This ensures data quality and integrity.

Method 2: Using Custom Scripts to Load Data From Redshift to Elasticsearch

You can use custom scripts to extract data from Redshift and load it into an S3 bucket and then into Elasticsearch. Let’s look into the steps to do this.

Step 1: Load Data from Amazon Redshift to S3

In this step, you can use the SQL ‘UNLOAD’ command to export data from Redshift to S3.

plaintextUNLOAD (‘SELECT* from table’)

To ‘s3: // your-s3-bucket / your-folder/’

CREDENTIALS ‘aws_access_key_id=your_access_key_id; aws_secret_access_key’

DELIMITER ‘,’ ALLOWOVERWRITE;For this, you must:

- Replace table with your Redshift table name.

- Provide the details of the S3 bucket where you will be loading the data.

- Provide your AWS credentials to access the S3 bucket.

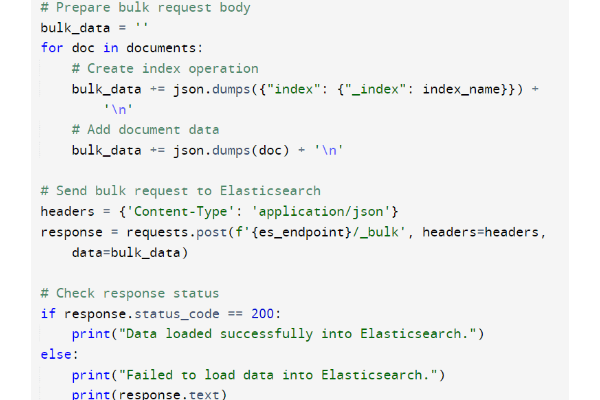

Step 2: Load Data from S3 to Elasticsearch

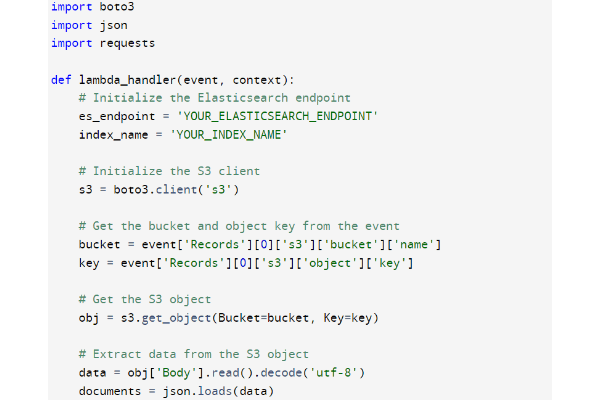

In this step, you use a Python script to load data from S3 to Elasticsearch.

Here, the boto3 and Lambda functions can be used to export data from the S3 bucket to Elasticsearch. This completes the custom method of loading data from Amazon Redshift to Elasticsearch.

Limitations of Using Custom Scripts to Load Data from Redshift to Elasticsearch

There are some limitations of using a custom script to export data from Redshift to Elasticsearch, including:

- Data Consistency: Maintaining data consistency between Redshift and Elasticsearch can be difficult. Additional efforts are required for incremental updates and ensuring synchronization between the systems.

- Performance: Data processing complexity, network latency, and resource availability are factors that affect the performance of custom scripts. You would need to optimize your custom scripts often to improve its performance.

- Scalability: Custom scripts are prone to errors when handling large volumes of data. You should customize your scripts to handle increased data volumes.

- Cost: Maintaining and monitoring custom scripts is expensive, especially since you will require a team of skilled and well-paid software developers.

The Takeaway

Data loading is an important process in the world of analytics. This guide describes two comprehensive methods for loading data from Redshift to Elasticsearch: a custom script and no-code SaaS tools like Estuary Flow.

With over 300 in-built connectors, automated workflow, scalability, and security, Flow presents itself as a powerful tool for real-time ETL processes. You can employ these features to make your analytics easier.

Want to load data from multiple sources to a destination? Estuary Flow can help simplify the entire process; all it takes is a few clicks to get your integration pipelines up and running. Sign up for Estuary Flow to leverage its benefits!

FAQs

- How is data stored in Redshift?

Amazon Redshift stores data in columnar format. Each data block holds values from a single column of the table. This allows Redshift to read only those columns that are required for query execution, improving performance significantly.

- What data type does Elasticsearch support?

Elasticsearch supports different data types, such as the core data type, which includes string, date, numeric, boolean, and binary. It also supports complex data types, such as arrays, objects, nested, and geo data types.

- What are some of the prominent use cases of Elasticsearch?

Elasticsearch is a popular analytical and search engine for storing, searching, and analyzing structured and unstructured data. It is commonly used for full-text search, log analytics, business analytics, security, and operational intelligence.

Start streaming your data for free

Build a PipelineAbout the author

Rob Meyer is the VP of Marketing of Estuary. He has worked extensively in marketing and product marketing on database, data integration, API management, and application integration technologies at WS02, Firebolt, Imply, GridGain, Axway, Informatica, and TIBCO.