Whether you're planning a one-off data migration from MySQL to PostgreSQL or looking to continuously replicate data between these two databases, there’s much to consider and several ways to approach the task.

In this post, we’ll provide a step-by-step tutorial on how to migrate MySQL to PostgreSQL using Estuary Flow, allowing you to migrate historical data and continuously capture new data using change data capture (CDC). We’ll also explore additional popular methods for converting MySQL to PostgreSQL.

Already familiar with MySQL and PostgreSQL? Skip directly to the migration methods to get started.

But first, let’s cover some essential prerequisites. To ensure a successful migration, it’s important to understand the key differences between MySQL and PostgreSQL and define your migration goals. Let's start with a brief (re)introduction to MySQL.

What is MySQL?

MySQL is a widely used relational database management system (RDBMS) based on SQL. Owned by Oracle, it's a critical component in many technology stacks, enabling teams to build and maintain data-driven services and applications.

Advantages of Using MySQL

- Open-Source and Free: MySQL Community Edition is open source and free to deploy, and Oracle offers commercial editions with enterprise-level support.

- Cross-Platform Support: It supports popular operating systems like Windows, Linux, and Solaris.

- Scalability: MySQL scales to large datasets, handling tables well beyond 50 million rows.

- Security: It provides granular user privileges, TLS-encrypted connections, and data-at-rest encryption, making it suitable for handling sensitive information.

Disadvantages of MySQL

- Performance at Scale: MySQL’s efficiency diminishes as data scales, particularly in write-heavy or complex query scenarios.

- Limited Tooling: Compared to other databases, MySQL has fewer advanced debugging and development tools.

What is PostgreSQL (Postgres)?

PostgreSQL, also known as Postgres, is an open-source, enterprise-class object-relational database management system (ORDBMS). It supports both SQL (relational) and JSON (non-relational) querying, offering greater extensibility and adaptability than MySQL.

Advantages of Using PostgreSQL

- Performance: PostgreSQL handles complex queries and large-scale data with greater efficiency.

- Extensibility: It supports user-defined types and various procedural languages.

- ACID Compliance: PostgreSQL ensures high reliability and fault tolerance with write-ahead logging.

- Geospatial Capabilities: It includes built-in geometric types, and the PostGIS extension adds full geospatial support, ideal for location-based services and applications.

Disadvantages of PostgreSQL

- Learning Curve: PostgreSQL can be more challenging to learn and configure compared to MySQL.

- Performance at Smaller Scales: It may be slower for simple, small-scale operations.

Why Migrate from MySQL to PostgreSQL?

Database migration is a crucial process that businesses undertake to meet evolving data needs, improve performance, or reduce costs. Here are some reasons why you might consider migrating from MySQL to PostgreSQL:

- Better Performance: If your current MySQL database struggles with complex queries or large datasets, PostgreSQL may offer the performance boost you need.

- Advanced Features: PostgreSQL’s support for advanced data types and geospatial capabilities can provide more flexibility in data handling.

- Cost Efficiency: Migrating to PostgreSQL could reduce hosting and operational costs, especially if MySQL is not optimized for your use cases.

Data Migration vs Data Replication

It’s important to distinguish between database migration and replication:

- Database Migration is typically a one-time process where data is moved from MySQL to PostgreSQL with the intent to switch entirely to PostgreSQL.

- Database Replication involves ongoing data copying between MySQL and PostgreSQL, allowing you to maintain both databases in sync.

Depending on your needs, you might choose one over the other. For instance, if your application relies heavily on complex queries, migrating entirely to PostgreSQL might be beneficial. However, if you require the simplicity of MySQL for certain tasks, ongoing replication could be a better solution.

Methods to Migrate Data from MySQL to PostgreSQL

- Method 1: Connecting MySQL to PostgreSQL Using Estuary

- Method 2: pg-chameleon for Ongoing Replication

- Method 3: pgloader for One-Time Migration

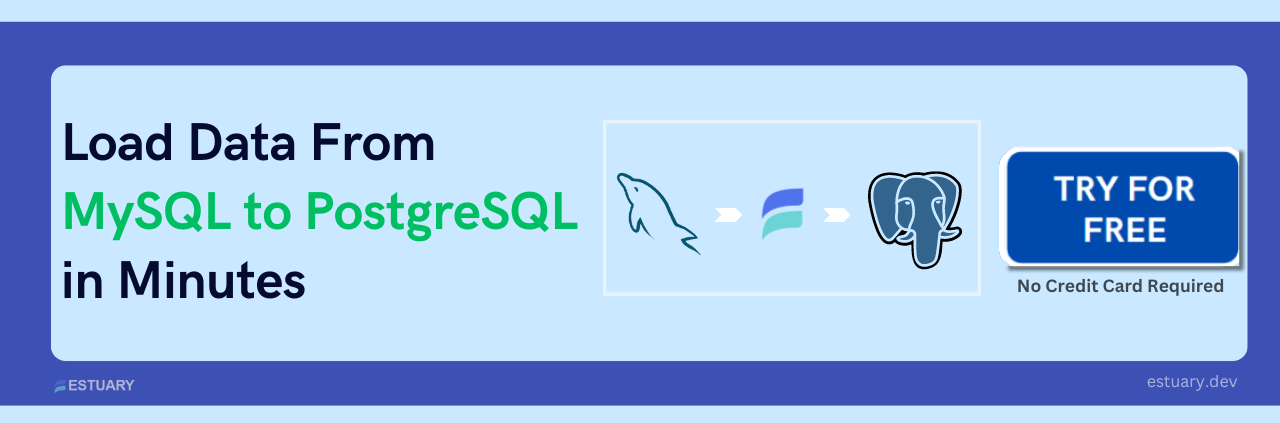

Method 1: Connecting MySQL to PostgreSQL Using Estuary

Estuary Flow is a powerful real-time data integration platform that simplifies and optimizes replication between MySQL and PostgreSQL. With Estuary, you can also set up many other integrations, such as MySQL to BigQuery and MySQL to Snowflake.

Why Choose Estuary Flow for MySQL to PostgreSQL Migration?

- User-Friendly Interface: Flow’s intuitive web application requires minimal technical expertise, making it easy to set up and manage data pipelines compared to command-line or scripted tools.

- Real-Time Data Sync: Estuary Flow performs a full historical backfill of your MySQL data into PostgreSQL, followed by real-time data transfer. This ensures that both databases remain in sync within milliseconds, minimizing data latency.

- Cost-Effective Replication: Utilizing change data capture (CDC) for MySQL, Flow captures and transfers only the changes made to your data. This method is less compute-intensive and reduces operational costs compared to traditional data migration tools.

- High Reliability: Flow stores captured MySQL data in cloud-backed collections before writing it to PostgreSQL. This protects data integrity, provides exactly-once semantics, and safeguards against data loss.
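The backfill-then-CDC pattern described above can be illustrated with a small in-memory simulation. This is a conceptual sketch, not Estuary Flow's implementation. The `ChangeEvent` format (operation, primary key, new row) is a hypothetical stand-in for the row-level insert, update, and delete events that a CDC tool reads from MySQL's binary log. Plain dictionaries stand in for the MySQL and PostgreSQL tables.

```python
from dataclasses import dataclass
from typing import Any, Dict, Iterable, Optional

Row = Dict[str, Any]


@dataclass
class ChangeEvent:
    op: str                    # "insert", "update", or "delete" (hypothetical format)
    key: int                   # primary key of the affected row
    row: Optional[Row] = None  # new row image; None for deletes


def backfill(source_rows: Dict[int, Row]) -> Dict[int, Row]:
    """Copy every existing row once: the historical snapshot."""
    return {key: dict(row) for key, row in source_rows.items()}


def apply_changes(target: Dict[int, Row], events: Iterable[ChangeEvent]) -> Dict[int, Row]:
    """Apply change events in log order so the target tracks the source."""
    for event in events:
        if event.op in ("insert", "update"):
            # Upsert: only the changed row is written, not the whole table.
            target[event.key] = dict(event.row or {})
        elif event.op == "delete":
            target.pop(event.key, None)
        else:
            raise ValueError(f"unknown op: {event.op}")
    return target


if __name__ == "__main__":
    mysql_table = {1: {"name": "alice"}, 2: {"name": "bob"}}
    pg_table = backfill(mysql_table)
    changes = [
        ChangeEvent("update", 1, {"name": "alice2"}),
        ChangeEvent("insert", 3, {"name": "carol"}),
        ChangeEvent("delete", 2),
    ]
    apply_changes(pg_table, changes)
    print(pg_table)  # {1: {'name': 'alice2'}, 3: {'name': 'carol'}}
```

After the one-time backfill, only the three changed rows are touched. This is why CDC avoids re-scanning whole tables.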

Step-by-Step Guide: Migrating MySQL to PostgreSQL Using Estuary Flow

Step 1: Sign Up for Free on Estuary

- Start by signing up for a free account on the Estuary platform. For large-scale production needs, you can contact Estuary for an organizational account.

Step 2: Prepare Your MySQL Database

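- Log in to the Estuary web app. Make sure your MySQL database meets the prerequisites listed in Estuary's MySQL connector documentation, such as binary logging enabled in ROW format and a database user with replication privileges.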

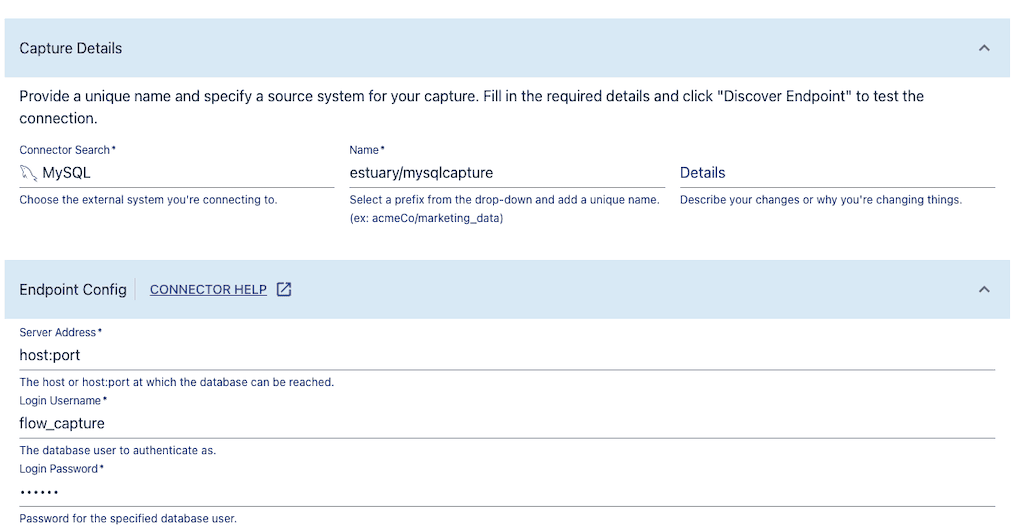
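Binlog-based CDC generally requires binary logging to be enabled in row format. As a quick pre-flight check, you could run `SHOW VARIABLES WHERE Variable_name IN ('log_bin', 'binlog_format');` in the MySQL client. You could then feed the results to a helper like the hypothetical `check_cdc_settings` below. The two required values are an assumption based on typical binlog CDC setups. Estuary's MySQL connector documentation remains the authoritative list and also covers the required user privileges.

```python
from typing import Dict, List

# Assumed requirements for binlog-based CDC; confirm them against the
# Estuary MySQL connector documentation for your MySQL version.
REQUIRED_SETTINGS = {
    "log_bin": "ON",         # binary logging must be enabled
    "binlog_format": "ROW",  # row-based events carry the changed row data
}


def check_cdc_settings(variables: Dict[str, str]) -> List[str]:
    """Return the problems found in SHOW VARIABLES output (empty list if none)."""
    problems = []
    for name, expected in REQUIRED_SETTINGS.items():
        actual = variables.get(name)
        if actual is None:
            problems.append(f"{name} is not set (expected {expected})")
        elif actual.upper() != expected:
            problems.append(f"{name} is {actual} (expected {expected})")
    return problems


if __name__ == "__main__":
    sample = {"log_bin": "ON", "binlog_format": "MIXED"}
    for problem in check_cdc_settings(sample):
        print(problem)  # binlog_format is MIXED (expected ROW)
```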

Step 3: Capture MySQL Data in Real-time

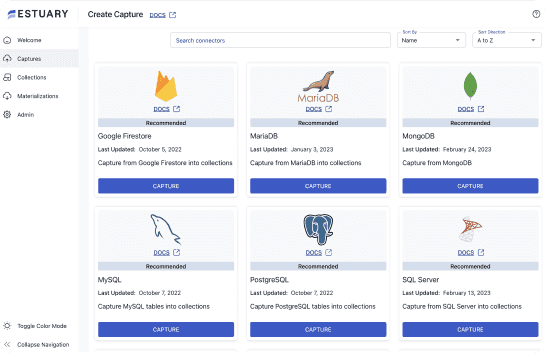

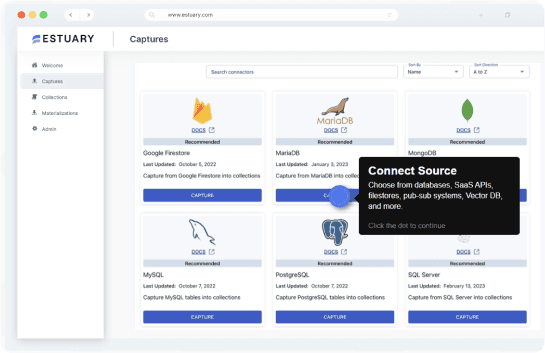

- Navigate to the Captures tab and select New Capture.

- Choose the MySQL tile and fill out the required details, including your MySQL server address, database username, and password.

- Select the tables you wish to capture and publish your settings.

Step 4: Materialize to Postgres

- Choose the PostgreSQL tile in the Materialize section.

- Provide the necessary PostgreSQL database credentials, including the database address, username, and password.

- Map the captured MySQL data to new tables in PostgreSQL and publish the materialization.

Estuary Flow will now handle the real-time data replication, ensuring that any new data in MySQL is automatically captured and replicated to PostgreSQL.

For more help with this method, see the Estuary Flow documentation for the MySQL capture connector and the PostgreSQL materialization connector.

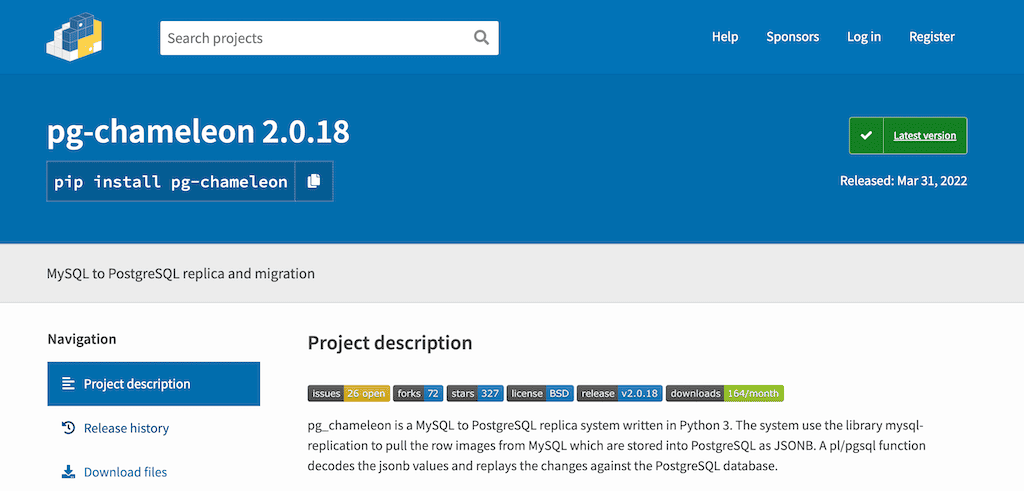

Method 2: Using pg-chameleon for MySQL to PostgreSQL Ongoing Replication

pg-chameleon is a Python tool that replicates MySQL data to PostgreSQL. It reads MySQL's binary log (the mechanism behind MySQL's native replication) and converts the changes for PostgreSQL. This makes it well suited to ongoing replication, keeping your data continuously synchronized between MySQL and PostgreSQL. Because changes are applied to PostgreSQL asynchronously, expect some replication lag.
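The pg_chameleon 2.x quick start describes a short sequence of commands to run after you fill in its configuration file. That file is created with `chameleon set_configuration_files` under `~/.pg_chameleon/configuration/`. The Python sketch below only assembles and optionally runs that sequence. Treat the command names and the `default` config and `mysql` source names as assumptions taken from that quick start, and verify them against the version you install. Its documentation also lists MySQL settings such as `binlog_format = ROW` and `binlog_row_image = FULL`.

```python
import shlex
import subprocess
from typing import List


def chameleon_steps(config: str = "default", source: str = "mysql") -> List[List[str]]:
    """Assemble the pg_chameleon command sequence (names assumed from its 2.x quick start)."""
    return [
        # Create pg_chameleon's own replica schema in PostgreSQL.
        ["chameleon", "create_replica_schema", "--config", config],
        # Register the MySQL source defined in the configuration file.
        ["chameleon", "add_source", "--config", config, "--source", source],
        # Copy the existing MySQL data into PostgreSQL.
        ["chameleon", "init_replica", "--config", config, "--source", source],
        # Start reading the binary log and replaying changes in PostgreSQL.
        ["chameleon", "start_replica", "--config", config, "--source", source],
    ]


def run_steps(steps: List[List[str]], dry_run: bool = True) -> None:
    """Print each command; execute it only when dry_run is False."""
    for argv in steps:
        print(shlex.join(argv))
        if not dry_run:
            # check=True raises CalledProcessError and stops at the first failure.
            subprocess.run(argv, check=True)


if __name__ == "__main__":
    run_steps(chameleon_steps())  # dry run: prints the commands only
```

With `dry_run=True` (the default), the commands are only printed. This lets you review them before anything touches a real database.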

Method 3: Using pgloader as a MySQL to PostgreSQL Migration Tool

pgloader is a powerful command-line tool that simplifies the process of migrating data from MySQL to PostgreSQL. It is particularly suited for one-time migrations and supports a variety of data sources. Here’s how to set it up:

Step 1: Install pgloader

pgloader is conveniently available through most package managers. Use the following commands based on your operating system:

- Debian/Ubuntu: `sudo apt-get install pgloader`

- macOS (Homebrew): `brew install pgloader`

For other systems, download the binary directly from the official pgloader website.

Step 2: Gather Database Credentials

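Collect the connection details for both databases. Each server's configuration file sets the port and listening address:

- MySQL: `my.cnf` (or `my.ini` on Windows), under the `[mysqld]` section (`port`, `bind-address`).

- PostgreSQL: `postgresql.conf` (`port`, `listen_addresses`).

Each database manages its own accounts and passwords rather than storing them in these files, so get the credentials from your database administrator or secrets manager.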

These details typically include:

- Hostname/IP Address: The server where the database is running.

- Port: The port number used for database connections.

- Username: The username with access to the database.

- Password: The password associated with the username.

- Database Name: The name of the specific database you want to migrate.

Alternatively, you might find these credentials set as environment variables.
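If your credentials live in environment variables, a small helper can assemble the two connection strings pgloader expects. In this sketch, `PGHOST`, `PGPORT`, `PGUSER`, `PGPASSWORD`, and `PGDATABASE` are the standard libpq variable names. The `MYSQL_*` names are hypothetical placeholders for whatever your environment defines. The helpers do not escape special characters. If a password contains characters such as `@` or `:`, check pgloader's connection-string documentation for the escaping rules.

```python
from typing import List, Mapping


def mysql_uri(env: Mapping[str, str]) -> str:
    # MYSQL_* variable names are hypothetical; adapt them to your setup.
    host = env.get("MYSQL_HOST", "localhost")
    port = env.get("MYSQL_PORT", "3306")
    return f"mysql://{env['MYSQL_USER']}:{env['MYSQL_PASSWORD']}@{host}:{port}/{env['MYSQL_DB']}"


def postgres_uri(env: Mapping[str, str]) -> str:
    # PGHOST, PGPORT, PGUSER, PGPASSWORD, PGDATABASE are standard libpq names.
    host = env.get("PGHOST", "localhost")
    port = env.get("PGPORT", "5432")
    return f"postgresql://{env['PGUSER']}:{env['PGPASSWORD']}@{host}:{port}/{env['PGDATABASE']}"


def pgloader_argv(env: Mapping[str, str]) -> List[str]:
    """Build the argument list for a one-shot `pgloader SOURCE TARGET` run."""
    return ["pgloader", mysql_uri(env), postgres_uri(env)]
```

Running `subprocess.run(pgloader_argv(os.environ), check=True)` would then start the migration. Passwords passed as command-line arguments can be visible to other users in process listings. On shared machines, a `.load` file with restricted permissions may be preferable.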

Step 3: Fine-Tune Your Migration with a Configuration File (Optional)

For granular control and customization, create a .load file. This allows you to define how the migration process should handle various aspects:

- Data Type Mapping: Specify how MySQL data types should be converted to their PostgreSQL equivalents.

- Table Selection: Choose specific tables to migrate or exclude certain ones.

- Schema Manipulation: Control schema creation and modifications.

- Indexing: Manage index creation during the migration.
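pgloader applies its own casting rules, which you can override with `CAST` clauses in the `.load` file. Still, it helps to know the kind of decisions involved. The hypothetical `map_type` helper below encodes a few common MySQL-to-PostgreSQL equivalents. It is an illustration, not pgloader's default rule set, and types such as `ENUM` and `SET` would still need separate handling.

```python
import re

# Illustrative equivalents only: a hypothetical table, not pgloader's
# built-in casting rules. Keys are lower-case MySQL base type names.
TYPE_MAP = {
    "tinyint": "smallint",       # PostgreSQL has no 1-byte integer type
    "mediumint": "integer",
    "int": "integer",
    "float": "real",             # MySQL FLOAT is single precision
    "double": "double precision",
    "datetime": "timestamp",
    "tinytext": "text",
    "mediumtext": "text",
    "longtext": "text",
    "blob": "bytea",
    "mediumblob": "bytea",
    "longblob": "bytea",
}

# Unsigned integers need a wider signed type to hold their full range.
UNSIGNED_MAP = {
    "tinyint": "smallint",
    "smallint": "integer",
    "mediumint": "integer",
    "int": "bigint",
    "bigint": "numeric(20)",
}


def map_type(mysql_type: str) -> str:
    """Translate a MySQL column type string into a PostgreSQL type name."""
    t = " ".join(mysql_type.lower().split())  # normalize case and spacing
    if t == "tinyint(1)":
        return "boolean"  # common MySQL convention for true/false columns
    unsigned = t.endswith(" unsigned")
    if unsigned:
        t = t[: -len(" unsigned")]
    base = re.sub(r"\(.*\)$", "", t)  # drop length/width, e.g. int(11) -> int
    if unsigned and base in UNSIGNED_MAP:
        return UNSIGNED_MAP[base]
    if base in TYPE_MAP:
        return TYPE_MAP[base]
    # Types such as varchar(255), decimal(10,2), date, and text are spelled
    # the same way in PostgreSQL; ENUM and SET still need manual handling.
    return t
```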

Example .load File:

```
LOAD DATABASE
     FROM mysql://user:password@localhost/mydb
     INTO postgresql://user:password@localhost/mypgdb

-- "include drop" drops PostgreSQL tables whose names match the MySQL tables
WITH include drop, create tables, create indexes

-- Session settings applied to the MySQL connection
SET MySQL PARAMETERS
    net_read_timeout = '120',
    net_write_timeout = '120';
```

Step 4: Execute pgloader

With your connection details (and, optionally, your configuration file) ready, start the migration with the following command:

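```bash
pgloader mysql://[MySQL connection details] postgresql://[PostgreSQL connection details]
```

Replace the placeholders with your actual connection strings, in the form `mysql://user:password@host:port/dbname` and `postgresql://user:password@host:port/dbname`.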

If you created a .load configuration file, pass it to pgloader instead of the connection strings, since the source and target are defined inside the file:

```bash
pgloader [filename.load]
```

For a comprehensive list of options and further configuration details, refer to the official pgloader documentation.
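After the load finishes, pgloader prints a summary report, but it is still worth spot-checking the result yourself. One simple approach is to run `SELECT COUNT(*)` against each table on both sides, using whichever client or driver you prefer, and compare the numbers. The hypothetical helper below handles only the comparison step; collecting the counts is left to your own tooling. The sample counts in the usage block are made up for illustration.

```python
from typing import Dict, List


def compare_row_counts(mysql_counts: Dict[str, int], pg_counts: Dict[str, int]) -> List[str]:
    """Report tables that are missing in PostgreSQL or whose row counts differ."""
    issues = []
    for table, expected in sorted(mysql_counts.items()):
        actual = pg_counts.get(table)
        if actual is None:
            issues.append(f"{table}: missing in PostgreSQL")
        elif actual != expected:
            issues.append(f"{table}: MySQL has {expected} rows, PostgreSQL has {actual}")
    return issues


if __name__ == "__main__":
    mysql = {"customers": 1200, "orders": 5400}     # illustrative counts
    postgres = {"customers": 1200, "orders": 5390}
    for issue in compare_row_counts(mysql, postgres):
        print(issue)  # orders: MySQL has 5400 rows, PostgreSQL has 5390
```

The counts only line up if MySQL is not receiving writes while you collect them.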

Choosing the Right Data Pipeline Tool

When selecting a MySQL to PostgreSQL migration tool, it's crucial to understand both the integration mechanics and the associated costs. Tools like Estuary Flow excel by utilizing real-time data synchronization rather than traditional batch processing, which helps avoid delays and the need for repeated database scans. This approach is not only more efficient but also cost-effective, especially for ongoing replication needs.

Before making a decision, ensure that the migration tool you choose aligns with your specific requirements and fits within your budget.

Conclusion

Database migration and replication are important facets of every business’s data strategy and architecture. As requirements and use cases evolve, it’s more important than ever to have the right type of database.

MySQL and PostgreSQL each have their place and optimal use cases. Whether your goal was to permanently move from one to the other or maintain both side-by-side, we hope this guide has simplified the process for you.

For more data integration tutorials, check out the Estuary blog and documentation.

Ready to Migrate from MySQL to PostgreSQL? Start with Estuary Flow!

Migrating your data has never been easier. Sign up for a free account on Estuary today and experience the power of real-time data integration between MySQL and PostgreSQL.

Need help getting started?

- Explore our detailed documentation to kick off your migration journey, with a special focus on the "Getting Started" section.

- Join our Slack community for instant support and connect with fellow data engineers.

- Watch our Estuary 101 walkthrough to see how Estuary can simplify your data migration.

Questions? We’ve got you covered!

Feel free to contact us anytime. We’re here to help you make your data integration project a success.