Data extraction tools are the key to unlocking the full potential of the vast amounts of data that businesses encounter daily. Whether it’s hidden within web pages, stored in databases, or embedded in documents, the ability to efficiently extract and organize data is what turns raw information into actionable insights.

These tools are essential for businesses looking to streamline their data collection processes, ensuring that valuable information is gathered accurately and efficiently. But with so many options available, each offering unique features and capabilities, choosing the right data extraction tool can be a daunting task.

This guide will help you navigate the landscape of data extraction tools for 2024, providing detailed insights into the top options available today. Whether you need speed, accuracy, or flexibility, you’ll find the right tool to meet your business needs.

Let’s dive in and discover how the best data extraction tools can empower your business to make smarter, data-driven decisions.

What is Data Extraction and Its Importance in Modern Business

Data extraction is the process of retrieving data from various sources, such as databases, websites, and documents. This data is processed and analyzed to gain valuable business insights or stored in a central data warehouse.

Data can exist in different formats, such as unstructured, semi-structured, or structured. Before it can be effectively analyzed, it’s essential to standardize this data, ensuring consistency and uniformity. Data extraction tools play a pivotal role in this process, automating the retrieval and transformation of data, so businesses can focus on analyzing and utilizing the information.
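To make the idea of standardization concrete, here is a minimal Python sketch that turns a structured source (CSV), a semi-structured source (JSON), and an unstructured source (free text) into the same record shape. The field names (`name`, `price`, `product`, `title`, `cost`) and the sentence pattern are assumptions chosen for illustration, not the format of any particular tool.

```python
import csv
import io
import json
import re


def from_csv(text):
    """Structured input: rows already have named columns."""
    return [
        {"name": row["name"].strip(), "price": float(row["price"])}
        for row in csv.DictReader(io.StringIO(text))
    ]


def from_json(text):
    """Semi-structured input: fields are nested and named differently."""
    return [
        {"name": item["product"]["title"].strip(), "price": float(item["cost"])}
        for item in json.loads(text)
    ]


# Hypothetical pattern for sentences such as "Widget costs $4.50".
PRICE_LINE = re.compile(r"(?P<name>[\w ]+?) costs \$(?P<price>\d+(?:\.\d+)?)")


def from_text(text):
    """Unstructured input: pull the fields out of free text with a regex."""
    return [
        {"name": m.group("name").strip(), "price": float(m.group("price"))}
        for m in PRICE_LINE.finditer(text)
    ]
```

Whatever the source, every function returns a list of `{"name": ..., "price": ...}` dictionaries, so downstream analysis only has to deal with one shape.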

Data extraction is a critical initial step in the Extract, Transform, and Load (ETL) process in the data ingestion paradigm, where data is extracted from source systems, transformed into a format suitable for analysis, and loaded into a target system. The extracted data will undergo various transformations to ensure accuracy, completeness, and consistency before being loaded into a data warehouse.
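The three ETL stages can be sketched in a few lines of Python using the standard-library `sqlite3` module. This is only an illustration under stated assumptions: the table names `raw_orders` and `orders` and their columns are hypothetical, and a SQLite database stands in for a real data warehouse.

```python
import sqlite3


def extract(source_conn):
    """Extract: read raw rows from the source system."""
    return source_conn.execute("SELECT email, amount FROM raw_orders").fetchall()


def transform(rows):
    """Transform: normalize emails, cast amounts, drop incomplete rows."""
    cleaned = []
    for email, amount in rows:
        if not email or amount is None:
            continue  # completeness check: skip rows with missing values
        cleaned.append((email.strip().lower(), round(float(amount), 2)))
    return cleaned


def load(target_conn, rows):
    """Load: write the cleaned rows into the target table."""
    target_conn.execute(
        "CREATE TABLE IF NOT EXISTS orders (email TEXT NOT NULL, amount REAL NOT NULL)"
    )
    target_conn.executemany("INSERT INTO orders VALUES (?, ?)", rows)
    target_conn.commit()


def run_pipeline(source_conn, target_conn):
    """Run extract, transform, and load in order."""
    load(target_conn, transform(extract(source_conn)))
```

Keeping each stage in its own function mirrors how the stages are described: the extract step knows only about the source, the load step knows only about the target, and the consistency rules live in the transform step.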

In today's data-driven world, data extraction tools are becoming increasingly important. With the vast amount of available data, it can be overwhelming to manually extract the data you need. Data extraction tools can help save time and increase accuracy by automating the process of collecting and organizing data.

These tools can load and transform data into most cloud data warehouses, catering to businesses of various sizes with different pricing models.

Why Do You Need Data Extraction Tools?

Data extraction tools retrieve and sort data from sources such as databases, websites, and documents. They automate time-consuming manual tasks, improve accuracy, and turn raw data into structured data that helps businesses make reliable, data-driven decisions.

Here are some key reasons why businesses rely on data extraction tools:

- Efficiency: Optimize the process of obtaining large amounts of data and minimize the amount of work done manually.

- Data Accuracy: Improve the quality of the extracted data by reducing the level of errors that may be introduced by the human factor.

- Standardization: Turn raw data into a structured format that can easily be analyzed and incorporated into other systems.

- Improved Scalability: Ensure that the growing data needs are addressed without negatively impacting performance.

- Advanced Insights: Extract meaningful information from various types of data to make informed decisions.

Types of Data Extraction Tools

There are various types of data extraction tools available, each with its own unique features and functionality. Here are some of the most common types:

- Database Extractors: Database extractors are used to extract data from databases, such as SQL databases, NoSQL databases, and other types of data storage systems.

- Web Scrapers: Web scrapers are tools that extract data from websites. They can be used to extract product data, customer reviews, pricing information, and more. Web scrapers typically work by crawling through the HTML code of a website and extracting specific data points based on predefined rules.

- API-based Extractors: API-based extractors work by connecting to an application programming interface (API) to extract data. This type of tool is commonly used for extracting data from social media platforms, e-commerce websites, and other online services.

- Cloud-Based Extractors: Cloud-based extractors are hosted on a remote server and can be accessed via the Internet. They can be used to extract data from various sources, including websites, social media platforms, and databases. Cloud-based extractors are typically more scalable and flexible than on-premise solutions.
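As a rough illustration of how a web scraper applies predefined rules to HTML, the Python sketch below uses the standard-library `html.parser` module. The class name `RuleBasedExtractor`, the `scrape` helper, and the rule format (a mapping from CSS class to output field) are assumptions made for this example; production scrapers are considerably more robust.

```python
from html.parser import HTMLParser


class RuleBasedExtractor(HTMLParser):
    """Collect the text of elements whose class attribute matches a rule."""

    def __init__(self, rules):
        super().__init__()
        self.rules = rules          # maps CSS class -> output field name
        self.current_field = None   # field waiting for its text, if any
        self.records = {field: [] for field in rules.values()}

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        for css_class in classes:
            if css_class in self.rules:
                self.current_field = self.rules[css_class]

    def handle_data(self, data):
        # Simplification: the first non-empty text after a matching tag is
        # treated as that element's value.
        if self.current_field and data.strip():
            self.records[self.current_field].append(data.strip())
            self.current_field = None


def scrape(html, rules):
    """Parse the HTML string and return the values captured for each field."""
    parser = RuleBasedExtractor(rules)
    parser.feed(html)
    parser.close()
    return parser.records
```

For example, calling `scrape(page_html, {"title": "name", "price": "price"})` would gather the text of every element carrying the class `title` or `price` into the `name` and `price` lists.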

Best 7 Data Extraction Tools to Follow in 2024

Here are seven data extraction tools that are widely recognized for improving data accuracy and automating the extraction process. With so many options on the market, choosing one can be difficult, so this section takes a closer look at what each tool offers.

Estuary Flow

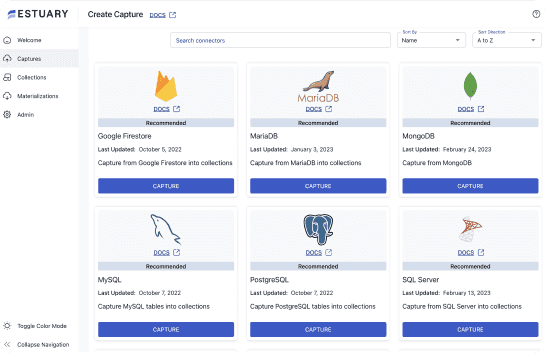

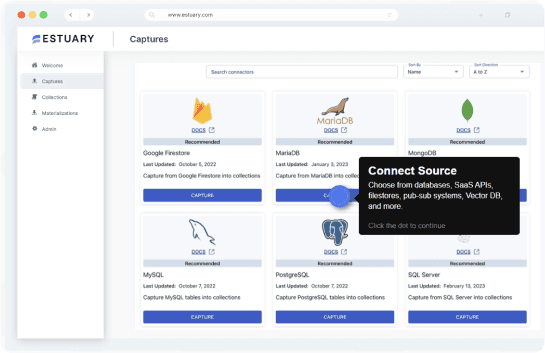

Estuary Flow is a leading data extraction tool that provides a flexible platform for real-time data extraction from databases, cloud storage, pub/sub systems, SaaS APIs, and more. It offers various features including data storage, transformation, and materialization to ensure efficient extraction of valuable data.

Estuary Flow’s powerful command-line interface gives backend engineers data integration superpowers, such as automating data ingestion, in-flight SQL transformations, and monitoring data quality. At the same time, Flow allows data analysts and other users to contribute and manage their data pipelines.

Key features of Estuary Flow

- Scalability: Estuary Flow is designed to scale seamlessly as your data needs grow. The platform can handle large volumes of data while keeping extraction and transformation fast.

- Data Cleansing: Estuary Flow provides built-in data cleansing and transformation features to help you clean and enrich the data you have extracted. This feature allows you to prepare the data for analysis or integration with other systems.

- Real-time Data Streaming: Estuary Flow is built on a real-time streaming backbone. By default, data is captured, materialized, and transformed with millisecond latency. This feature ensures that your data is always up-to-date and readily available for analysis or integration.

- Data Migration: Estuary Flow supports a wide range of data sources and destinations, which can be configured through a simple drag-and-drop interface. This can be particularly beneficial to users who do not have the necessary resources or expertise to build and manage their own data pipeline.

Tip: Looking for the best Data Extraction Tool? Estuary is one of the top-performing data extraction solutions. Sign up to use it for free!

Import.io

Import.io is a cloud-based data extraction tool that allows you to extract data directly from the web. It scrapes data from web pages so that it can be used for further analysis and automation. With Import.io, you can create an extractor by providing an example URL containing the data you want to extract. Once Import.io loads the webpage, it automatically identifies and presents the data it finds in a structured format.

Key features of Import.io

- Efficient and Reliable Data Extraction: Import.io has a built-in crawl service that supports multiple URL queries and uses dynamic rate limiting and retry systems to handle errors. This makes Import.io a highly efficient and reliable tool for data extraction.

- User-Friendly Interface: Import.io provides a user-friendly point-and-click interface that allows you to easily identify the specific data elements you want to extract without writing complex code or scripts.

- Advanced Features: Import.io offers advanced features such as extracting data from multiple websites and scheduling automatic data extraction. It can also integrate with other tools and platforms, which streamlines the extraction process and makes it easier to move extracted data into other systems for analysis.
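The retry behavior mentioned in the list above follows a general pattern that can be sketched in Python as retrying with exponential backoff, so the client slows down each time a request fails. This is a generic illustration, not Import.io's implementation; the function name `fetch_with_retry` and its parameters are assumptions.

```python
import time


def fetch_with_retry(fetch, url, max_attempts=4, base_delay=0.5, sleep=time.sleep):
    """Call fetch(url); after a failure wait base_delay * 2**attempt and retry."""
    for attempt in range(max_attempts):
        try:
            return fetch(url)
        except OSError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            # Back off: 0.5s, 1s, 2s, ... so a struggling server gets breathing room.
            sleep(base_delay * 2 ** attempt)
```

The `fetch` and `sleep` arguments are passed in rather than hard-coded, which makes the function easy to try out with a fake fetcher and without real waiting.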

Octoparse

Octoparse is a web data extraction tool that allows you to extract information from websites. It works for both static and dynamic websites, including those using Ajax, and supports various data formats for exporting data, such as CSV, Excel, HTML, TXT, and databases. Octoparse facilitates the extraction of structured data from websites, which is crucial for analysis and business decision-making. You can also choose to run your data extraction project either on your local machines or in the cloud.

Key features of Octoparse

- Extraction Modes: Octoparse offers two extraction modes: Task Template and Advanced Mode. The Task Template is ideal for new users as it provides pre-built templates for common scraping tasks. The Advanced Mode offers more features and functionalities for experienced users, such as RegEx, XPath, Database Auto Export, and many more.

- Cloud Extraction: Octoparse's cloud extraction feature enables simultaneous web scraping at scale. You can perform concurrent extractions using multiple cloud servers and extract massive amounts of data to meet your large-scale extraction needs. Octoparse also allows you to schedule regular data extraction from various sources.

- Proxies: Octoparse enables you to scrape websites by rotating anonymous HTTP proxy servers. In Cloud Extraction, Octoparse applies many third-party proxies for automatic IP rotation. For Local Extraction, you can manually add a list of external proxy addresses and configure them for automatic rotation. IPs are rotated based on user-defined time intervals, which lets users extract data with less risk of getting their IP addresses banned.
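Rotating proxies on a user-defined time interval can be sketched as follows. This is a generic Python illustration rather than Octoparse's implementation; the class name `ProxyRotator` and the proxy addresses you would pass in are hypothetical.

```python
import itertools
import time


class ProxyRotator:
    """Hand out proxies round-robin, switching after a fixed interval."""

    def __init__(self, proxies, interval_seconds, clock=time.monotonic):
        if not proxies:
            raise ValueError("at least one proxy is required")
        self._cycle = itertools.cycle(proxies)
        self._interval = interval_seconds
        self._clock = clock
        self._current = next(self._cycle)
        self._switched_at = clock()

    def current(self):
        """Return the active proxy, rotating first if the interval has elapsed."""
        now = self._clock()
        if now - self._switched_at >= self._interval:
            self._current = next(self._cycle)
            self._switched_at = now
        return self._current
```

Each outgoing request would ask the rotator for `current()` and route through that address, so no single IP is used for longer than the configured interval.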

ScraperAPI

ScraperAPI is a user-friendly web scraping tool that simplifies data extraction from websites. It provides easy access to millions of proxies and helps bypass the anti-scraping measures that websites use, including IP blocking, CAPTCHAs, and other bot detection methods. The tool is particularly useful for users who need to extract large amounts of data from the web efficiently. Additionally, ScraperAPI can extract and process unstructured data from various sources, including documents, PDFs, social media, and web pages.

Key features of ScraperAPI

- Large Proxy Pool: ScraperAPI has a large pool of proxies, which makes it easier to avoid getting blocked or flagged while scraping.

- Excellent Customization Options: ScraperAPI provides many customization options, making it a great tool for businesses that need to extract specific data from websites. For instance, users can customize request headers and cookies and specify the number of retries and timeouts for each request.

- Good Location Support: ScraperAPI supports multiple locations, which means that you can scrape websites from different locations around the world. This is useful if you need to extract data from geographically restricted websites.
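To show what customizing headers, cookies, and timeouts looks like at the HTTP level, here is a small Python sketch built on the standard-library `urllib.request` module. It does not use ScraperAPI's own parameters or endpoints; the helper names `build_request` and `fetch` and the example header values are assumptions.

```python
import urllib.request


def build_request(url, headers=None, cookies=None):
    """Return a urllib Request carrying custom headers and cookies."""
    request = urllib.request.Request(url, headers=headers or {})
    if cookies:
        # Cookies travel in a single "Cookie" header as name=value pairs.
        cookie_header = "; ".join(f"{name}={value}" for name, value in cookies.items())
        request.add_header("Cookie", cookie_header)
    return request


def fetch(url, headers=None, cookies=None, timeout=10.0):
    """Send the request and return the response body; timeout is in seconds."""
    request = build_request(url, headers, cookies)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read()
```

A call such as `fetch(url, headers={"User-Agent": "demo-bot/1.0"}, cookies={"session": "abc"}, timeout=5)` would send the page request with those settings applied.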

Hevo

Hevo is a user-friendly and reliable cloud-based data integration platform that allows organizations to automate collecting data from more than 100 applications and databases, loading it to a data warehouse, and making it analytics-ready.

Key features of Hevo

- Ease of Use: Hevo provides a user-friendly interface that allows non-technical users to set up data extraction workflows without requiring coding skills.

- Near-Real-Time Data Extraction: Hevo allows you to extract data from various sources in near-real time, providing up-to-date information for analysis and reporting. This feature ensures that the data is always current and eliminates the need for manual data refreshing.

- Automated Schema Mapping: Hevo automatically maps the source schema to the destination schema, saving the time and effort required for manual schema mapping. This feature ensures that the data is accurately and consistently transformed into the destination schema.
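Automated schema mapping can be pictured as inferring a destination column type for every source field. The Python sketch below is a simplified illustration and not Hevo's algorithm; the type table `SQL_TYPES`, the widening rule, and the function names are assumptions.

```python
# Assumed mapping from Python value types to generic SQL column types.
SQL_TYPES = {bool: "BOOLEAN", int: "BIGINT", float: "DOUBLE", str: "TEXT"}


def infer_schema(records):
    """Map each source field to a destination column type."""
    schema = {}
    for record in records:
        for field, value in record.items():
            if value is None:
                schema.setdefault(field, None)  # type unknown so far
                continue
            sql_type = SQL_TYPES.get(type(value), "TEXT")
            previous = schema.get(field)
            if previous in (None, sql_type):
                schema[field] = sql_type
            elif {previous, sql_type} == {"BIGINT", "DOUBLE"}:
                schema[field] = "DOUBLE"  # widen integer columns to hold floats
            else:
                schema[field] = "TEXT"    # conflicting types fall back to text
    # Fields that were always None default to TEXT.
    return {field: sql_type or "TEXT" for field, sql_type in schema.items()}


def create_table_sql(table, schema):
    """Build a CREATE TABLE statement from the inferred schema."""
    columns = ", ".join(f'"{name}" {sql_type}' for name, sql_type in schema.items())
    return f'CREATE TABLE "{table}" ({columns})'
```

Given a batch of source records, `infer_schema` produces the column types and `create_table_sql` turns them into a destination table definition, which is the manual step that automated mapping removes.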

ScrapingBee

ScrapingBee is a web scraping tool that allows you to easily extract data from websites. It provides a robust and scalable solution for businesses and users who need to gather data from the web. By identifying and extracting relevant data from websites, ScrapingBee helps businesses save time and gain valuable insights. It takes care of the technical aspects of web scraping, such as rotating proxies, bypassing CAPTCHAs, and managing cookies. This means you don't need advanced technical skills to use the tool effectively.

Key features of ScrapingBee

- Ease of Use: ScrapingBee offers a user-friendly interface and a REST API, making it easy to integrate into existing workflows and systems. Additionally, it provides various features such as JavaScript rendering, automatic retries, and data export options, making it a comprehensive solution for web scraping needs.

- Reliability: ScrapingBee is known for its reliability and fast response times, which is essential for businesses that need to extract data in real time. It offers multiple data center locations across the globe, ensuring that you can access and extract data from websites with low latency.

- Keyword Monitoring and Backlink Checking: ScrapingBee offers a large proxy pool that enables marketers to perform keyword monitoring and backlink checking at scale. With rotating proxies, it reduces the chances of getting blocked by anti-scraping software.

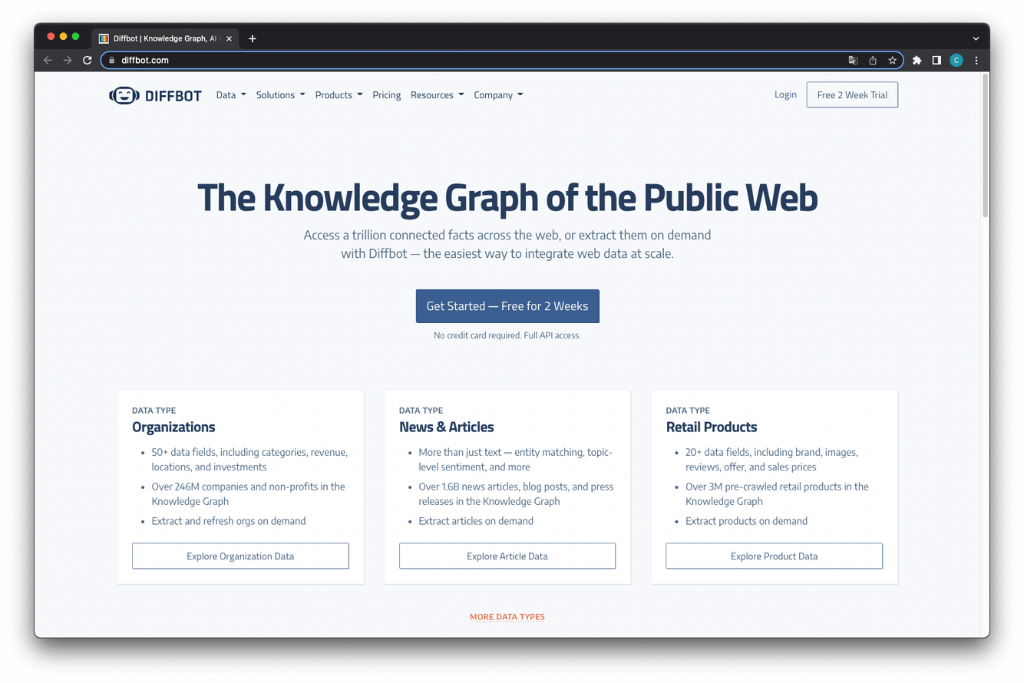

Diffbot

Diffbot is a powerful data extraction tool that excels at turning unstructured web data into structured and contextual databases, making it ideal for scraping articles, news websites, product pages, and forums. It enables users to analyze data from multiple perspectives, search for patterns, anomalies, and correlations in data sets, and make data-driven decisions.

Key features of Diffbot

- APIs for Easy Integration: Diffbot offers APIs that allow for easy integration with other applications, making it a convenient tool for businesses.

- Advanced Technical Resources: Diffbot provides advanced technical resources, such as SDKs and developer tools, to help businesses get the most out of the data.

- Support for Multiple Data Types: Diffbot can extract data from a wide range of web page types, including articles, product pages, and discussion forums.

- Data Enrichment: Diffbot can automatically identify and enrich extracted data with additional contexts, such as sentiment analysis or entity recognition.

Conclusion

Data extraction tools are critical for businesses that rely on large volumes of data to make informed decisions. At that scale, extracting the data you need by hand quickly becomes overwhelming. Data extraction tools save time and increase accuracy by automating the process of collecting and organizing data.

The 7 data extraction tools we explored (Estuary Flow, Import.io, Octoparse, ScraperAPI, Hevo, ScrapingBee, and Diffbot) offer powerful features for extracting and handling data efficiently. Each tool has unique strengths that make it suitable for specific business needs, so the right choice depends on the requirements and goals of the business. With the right tool in hand, businesses can extract valuable insights from data to make informed decisions that drive growth and success.

Manual data entry is often inefficient, error-prone, and time-consuming. Using automated data extraction software can significantly improve accuracy and productivity by eliminating these disadvantages.

To take advantage of real-time analytics and data management software, you need to extract your data effectively. Sign up for Estuary Flow today and quickly build data pipelines with low-code features to streamline your data flow.

FAQs

What are the top features to look for in a data extraction tool?

The most important features of data extraction tools include scalability, real-time data processing, robust data cleansing capabilities, support for a variety of data sources, and an intuitive user interface.

How do data extraction tools handle different data formats?

The best and most reliable data extraction tools are capable of processing structured, semi-structured, and unstructured data, transforming it into a standardized format for analysis and seamless integration.

What are the common challenges faced during data extraction?

Some of the challenges include managing large data volumes, handling unstructured data, ensuring data quality, and maintaining data privacy and security.

How do I choose the right data extraction tool for my needs?

It’s important to take into account your data sources, features required, budget, and scalability needs. You should choose tools based on ease of use, reliability, scalability, integration capabilities, and customer support to find the best fit for your requirements.